This is the second and final post covering our key takeaways from Unite 2015. Click here to view the first post.

Greg Rogers, Design and Narrative Lead; Perspective

Virtual Reality

VR is a major buzzword in the game industry right now. Headsets, including the Oculus Rift, HTC Vive, Google Cardboard, and PlayStation VR (among others), are ramping up to flood the market and usher in a new age of immersion in games. There were several talks (almost too many) at Unite 2015 focused on virtual reality and how this new subset of the gaming medium presents challenges that designers have not yet encountered. The most attractive feature of VR headsets is, obviously, that they completely immerse players in the game world; games are no longer confined to a flat screen, and players are instead made to feel as though they have been inserted into the game space.

Nicole Lazzaro says we need "VR design leadership, not management." It's about the experience. pic.twitter.com/ykhOWjYqCB

— Tesseract @ Uark (@TesseractUA) September 21, 2015

Across the many talks, one of the most prevalent themes was that game designers must understand (and in some cases relearn) techniques and tropes that many gamers take for granted. Being totally immersed in a 3-D experience plays tricks on the mind. A poorly designed experience can cause bouts of motion sickness because the brain receives conflicting messages from what the eyes see and what the body physically feels. One phrase that stood out to me was that moving VR game design forward will require “Design leadership, not Design management.” What this means is that designers cannot simply recycle old experiences into headsets; instead they must come to terms with a new set of design techniques that specifically take advantage of the VR sub-medium. Most of these techniques revolve around creating a “natural” environment for players. If a User Interface or Heads-Up Display must be present, embedding it into the game’s space (sometimes using it to naturally frame the center of the “screen”) helps players accept immersion and can give them a frame of reference. Providing control schemes that allow players to make natural motions with their arms and hands helps avoid a disconnect between what the body is feeling and what it is seeing.

It is also often better to prefer subtlety to bombastic display, both in the level space players explore and in the visceral nature of violence. Many games and demos that I saw featured the player either being stationary or being moved along a predetermined path; the focus of interactivity was not necessarily on where the player goes, but on what he or she chooses to interact with in the game space. It was also mentioned that events appear and feel more real in VR; for example, seeing a dead body in virtual reality is much more likely to trigger a psychological phobia than seeing it on a screen (however, this same principle can be applied at the opposite end of the spectrum, with therapeutic and stress-reducing effects).

I was able to play a demo on the PlayStation VR that really impressed upon me how this new type of interactivity could drive new types of immersion. In the demo, I assumed the role of a passenger in a car locked in a high-speed shootout pursuit. Motorcycles and ominous black SUVs surrounded my vehicle and fired bullets that felt and sounded like they were whizzing right past my head. Amidst the chaos, the car’s driver tossed an Uzi onto the dashboard in front of me and told me to fight back. Using PlayStation Move controllers for input, I was able to manipulate each of my hands independently, truly making me feel like I existed in this blockbuster-esque fantasy. I reached forward, grabbed the gun by pulling a trigger on the back of my right-hand “control wand,” and began to fire back. Soon, however, I was out of ammo, and as I continued to pull the trigger, nothing happened; the game didn’t hold my hand and automatically reload for me. I looked around the car desperately and found a bag of ammo clips sitting between me and the driver. I reached to the side with my other hand, picked up a clip, and then brought my two hands together to reload and continue fighting. It was an extremely natural motion, an experience I’ve never quite had in all my years of gaming.

Chloe Costello, Art Director; Perspective

Virtual Reality

Unite 2015 brimmed with presentations and buzz about virtual reality games. I love the prospects of VR, and I ended up being pleasantly surprised by the new content developers presented. I especially enjoyed a wonderful talk covering Owlchemy Labs’s new virtual reality game Job Simulator. The game takes place in a world where robots have replaced all jobs. Humans realize that they miss the experience of working, so Job Simulator is a way to address their nostalgia. Or, perhaps, give them a fantasy world in which they can throw hot dogs and shoot staples across the room.

A HUMAN-MADE CATASTROPHE IN JOB SIMULATOR.

Last day of #Unite15. A little race car action. #unity3d #VR #oculus pic.twitter.com/28oZzXLi5M

— VirZOOM (@VirZOOM) September 23, 2015

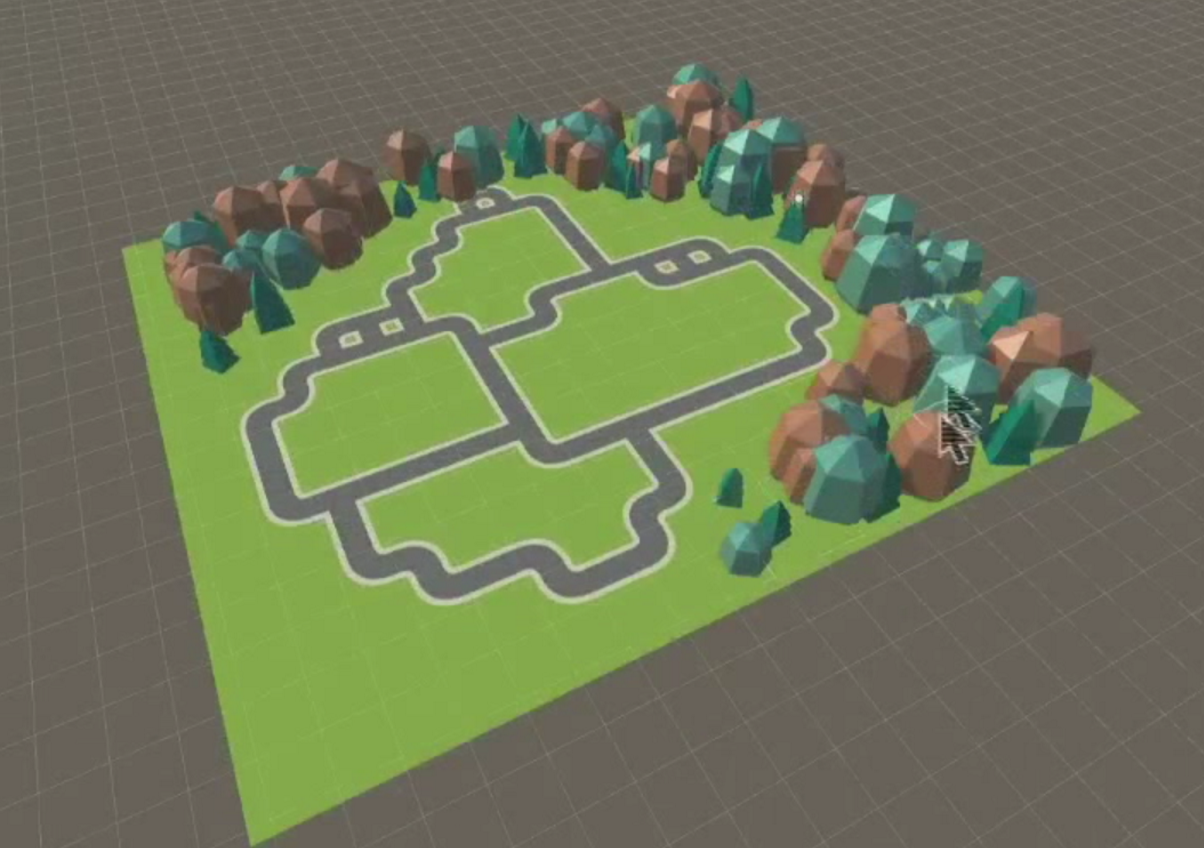

Unity’s 2D tools

At the conference, Unity announced that a future update will include a new suite of 2D tools. I do not work in 2D as much as 3D, so I’m more excited for these tools because of their applications to the 3D workflow. Near the end of Unity’s talk on these tools, the presenter, Andy Touch, started discussing the power of the new tile maps and brushes!

I CAN MAKE BRUSHES TO PAINT WITH MODULAR OBJECTS! HOW COOL.

3. For full room tracking, you will need to have at least two positional trackers. Oculus only comes with one, but you can supposedly connect a second one. Just be sure to place them in such a way that one hand doesn’t occlude the other. The HTC Vive will be using laser trackers that purposely take an entire room into account in terms of trackable space.

4. Playtest like you have never playtested before. Since VR is still in the wild west phase, you cannot assume or be dogmatic about what you think will or won’t work. Try everything! Get your ego out of the way. Case in point: Owlchemy discovered almost by accident that they could have the virtual hands disappear when they grab an item, so long as the item itself tracked the hand positions. This means Owlchemy does not have to create a variety of hand-grabbing animations, allowing them to prioritize their time in other areas.
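To make the Owlchemy trick a little more concrete, here is a minimal, engine-agnostic sketch in Python. It is not Owlchemy's code or any Unity API; the `Hand` and `Item` classes and their method names are hypothetical, invented purely for illustration. The idea is only this: while an item is held, the hand model is hidden and the item simply follows the tracked hand position, so no grab animation is needed.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Item:
    """Hypothetical grabbable object in the scene."""
    position: Vec3


@dataclass
class Hand:
    """Hypothetical virtual hand driven by a tracked controller."""
    position: Vec3 = (0.0, 0.0, 0.0)
    visible: bool = True
    held: Optional[Item] = None

    def grab(self, item: Item) -> None:
        # Hide the hand model and snap the item to the hand.
        self.held = item
        self.visible = False
        item.position = self.position

    def release(self) -> None:
        # Show the hand again; the item stays where it was dropped.
        self.held = None
        self.visible = True

    def update(self, tracked_position: Vec3) -> None:
        # Called once per frame with the controller's tracked position.
        self.position = tracked_position
        if self.held is not None:
            self.held.position = tracked_position


hand = Hand()
stapler = Item(position=(1.0, 0.0, 0.0))
hand.update((0.5, 1.0, 0.2))
hand.grab(stapler)             # hand disappears, stapler snaps to it
hand.update((0.6, 1.1, 0.3))   # stapler follows the tracked hand
hand.release()                 # hand reappears, stapler stays put
```

In a real engine the same pattern would more likely be expressed by toggling a renderer and parenting the item to the controller's transform, but the bookkeeping is the same.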

To drive home how important hand tracking will be, I got to test out the PlayStation VR (formerly Morpheus) in a car chase scene. Being able to use my hands in virtual space made for a great, visceral experience. But it’s only the beginning. We can certainly look forward to some excellent, unique experiences as VR technology grows and matures.

—

Looking forward to future UNITEs, our hope is to see more in-depth content that illustrates Unity’s features through concrete examples from published games. It’s fine if some of this is quite technical, but it should not primarily recycle what’s in the Unity User’s Guide. There is an array of great resources on basics through Unity’s site alone (http://unity3d.com/learn), so UNITE sessions should be used to spotlight underutilized resources or especially helpful (but not obvious) workflows, and show inventive work by indie developers. We’d also like to see a wider variety of topics covered--VR and AR are significant, but too much focus on these, with some overlapping content between sessions, narrowed the scope of the conference a bit. Paradoxically, we feel that Unity could benefit now by dedicating sessions to great level design, narrative, music, and art direction first, and allowing the supporting role played by Unity in making it happen to peer around the edges, rather than command center stage. Might it also be time...god help us...for a UNITE in Austin? Or...Chicago?
