Virtual Pompeii

Understand and experience the ancient Roman city of Pompeii through the integration of art, spatial analysis, and the immersive exploration of 3D models

Project Overview

Virtual Pompeii (VRP) is a multidisciplinary, data-centered project focused on exploring the intersection of spatial configuration, artwork, movement, and social behavior in the ancient Roman city. How did people move through these houses? What role did the houses’ frescoes and mosaics play in shaping movement? VRP develops predictive models for movement using network analysis and visual integration studies, then puts these models to the test by having human subjects today navigate a set of houses digitally reconstructed in the game engine Unity. This produces new data that help us hypothesize about and interpret the Roman experience of Pompeii.

What kind of decoration is found in what kind of space, and how does it impact movement and behavior? This is one of our central questions. To approach it, VRP researchers have created a search tool that allows the distribution of motifs, styles, and themes in a digitized archive of Pompeian art to be tracked against location and spatial type. Building on the ability to allow a global community of “players” to explore the set of virtual houses created in a game engine, the project looks forward to developing more complex game-based scenarios to test hypotheses about behavior in these houses, using 21st-century game technology and design as a heuristic practice for exploring the past.

Experimental Modeling and Player Testing in Virtual Environments

VRP’s interactive 3D models are developed in the Unity game engine and can be explored directly in a browser using WebGL; no plugins or downloaded media are required, so distribution can be direct and global. Guided by first-hand visits and documentation in Pompeii, as well as the evidence found in the multi-volume sets Pompei: pitture e mosaici and Häuser in Pompeji, these models represent faithful but necessarily hypothetical reconstructions of the appearance of these houses before their destruction in 79 CE.

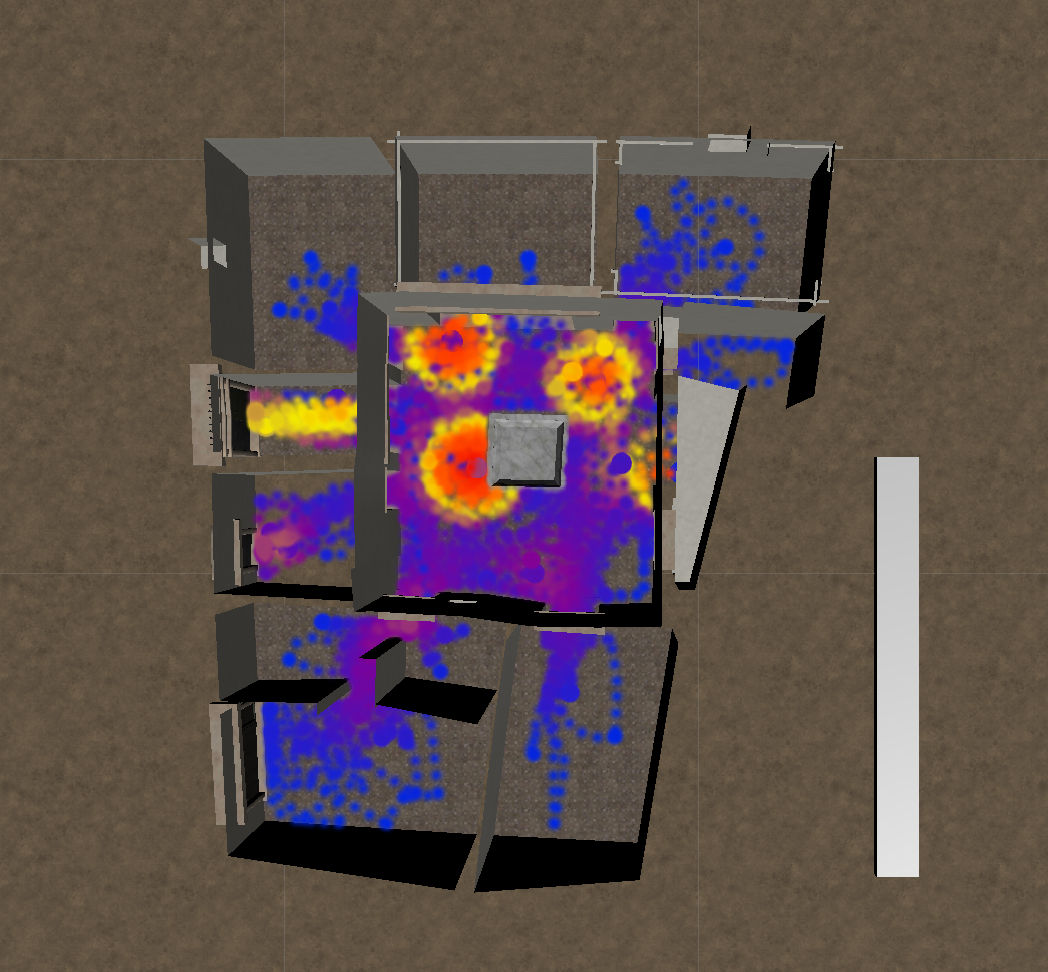

Through a custom-coded feature set in the Unity application, VRP records the movement patterns and gaze behavior of players as they navigate the models. These recordings are then compared to the movement patterns predicted through spatial network and visibility graph analysis. Preliminary results suggest that decoration, a factor not considered in traditional space syntax, has a significant impact on human movement through these spaces. At the same time, VRP also collects qualitative data through response forms administered as part of the navigation experiment.

The forms are designed to develop a picture of the cognitive and emotional experience of exploring these houses. While we cannot bring the original inhabitants of Pompeii back to life, nor collect this kind of data from the movement of tourists through the actual structures in Pompeii, the (virtual) embodied experiences of living humans moving through this past environment can be powerful indirect evidence for the experience of these houses by ancient Romans. For this, it is critical that the player community be large and diverse. Hence we call our approach of using global, crowd-sourced testing of virtual environments in archaeology “diverse proxy phenomenology.”
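The comparison between recorded and predicted movement can be illustrated with a small sketch. It assumes a log of room-entry events per player and one predicted score per room, and it uses Spearman rank correlation as the agreement measure. The log format, the room names, the scores, and the choice of Spearman correlation are all assumptions made for illustration; the source does not describe how VRP performs this comparison.

```python
from collections import Counter

# Hypothetical log: one (player_id, room) record each time a player enters a room.
VISIT_LOG = [
    ("p1", "fauces"), ("p1", "atrium"), ("p1", "tablinum"), ("p1", "peristyle"),
    ("p2", "fauces"), ("p2", "atrium"), ("p2", "cubiculum"), ("p2", "atrium"),
    ("p3", "fauces"), ("p3", "atrium"), ("p3", "tablinum"),
]

# Hypothetical predicted score per room (for example, a centrality measure).
PREDICTED = {
    "fauces": 0.2, "atrium": 0.9, "tablinum": 0.6,
    "peristyle": 0.4, "cubiculum": 0.1,
}


def average_ranks(values):
    """Rank values from 1 (smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; assumes neither sequence is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (spread_x * spread_y)


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


def prediction_agreement(visit_log, predicted):
    """Correlate predicted room scores with observed room-entry counts."""
    counts = Counter(room for _player, room in visit_log)
    rooms = sorted(predicted)
    return spearman([predicted[r] for r in rooms], [counts[r] for r in rooms])


if __name__ == "__main__":
    print(round(prediction_agreement(VISIT_LOG, PREDICTED), 3))
```

A value near 1 would mean players visit rooms in roughly the order the model predicts. A lower value would flag rooms where some factor outside the model, such as decoration, may be drawing or deflecting movement.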

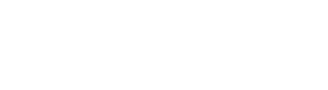

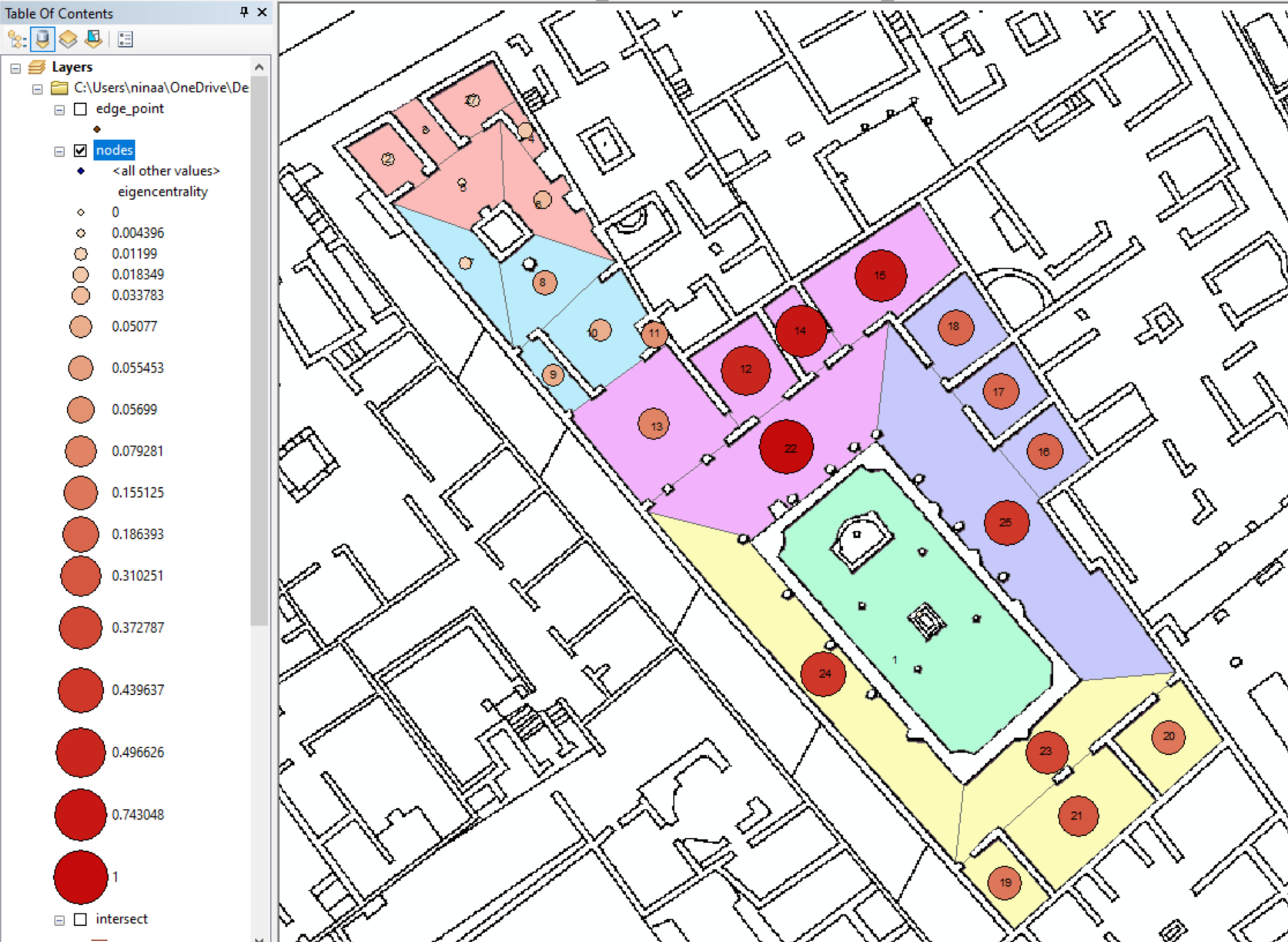

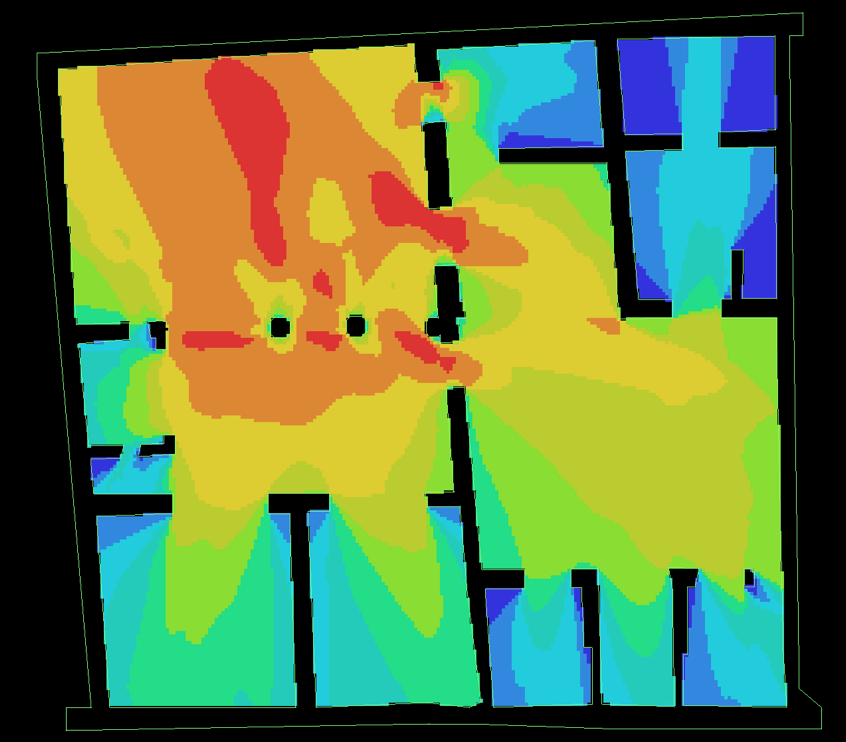

Predictive Modeling: Network Spatial Analysis and Visual Integration

Traditional Space Syntax Analysis (SSA) has been widely applied in archaeology, and in the study of contemporary buildings, to generate hypotheses about how people move through built space. As a fundamental approach, SSA views the rooms and doors of a structure as nodes and edges, generating a network graph. At the same time, a visibility graph of the structure is generated, visualizing as a heat map which spaces within the structure are most and least visible, with the attendant assumption that people tend to move toward those spaces from which they can see the most. VRP updates SSA to a form of Spatial Network Analysis, using familiar network measures (betweenness, closeness, eigenvector, hub, authority) together with visual integration heatmaps to generate predictions about which paths players will take through the models. For Pompeii more broadly, Spatial Network Analysis and visual integration heatmaps can be used to develop a descriptive, data-driven profile of each space in Pompeii, which can then be matched with the complexity, visual interest, and thematic content of its decoration.
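As a minimal sketch of the room-and-door graph idea, the following computes two of the measures named above, closeness and betweenness, for a small house. The floor plan is hypothetical and is not one of the VRP models. The code uses breadth-first search and Brandes' algorithm on an unweighted, connected graph, which is one common way to compute these measures and not necessarily the way VRP computes them.

```python
from collections import deque

# Hypothetical room-door graph of a small atrium house (illustrative only).
# Keys are rooms; values are the rooms reachable through a single doorway.
HOUSE = {
    "fauces": ["atrium"],
    "atrium": ["fauces", "cubiculum", "ala", "tablinum"],
    "cubiculum": ["atrium"],
    "ala": ["atrium"],
    "tablinum": ["atrium", "peristyle"],
    "peristyle": ["tablinum", "triclinium"],
    "triclinium": ["peristyle"],
}


def bfs_distances(graph, source):
    """Return the number of doorways crossed from source to each room."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        room = queue.popleft()
        for neighbor in graph[room]:
            if neighbor not in dist:
                dist[neighbor] = dist[room] + 1
                queue.append(neighbor)
    return dist


def closeness(graph):
    """Closeness: (n - 1) / sum of distances. Assumes a connected graph."""
    n = len(graph)
    return {
        room: (n - 1) / sum(bfs_distances(graph, room).values())
        for room in graph
    }


def betweenness(graph):
    """Unnormalized betweenness for an undirected graph (Brandes)."""
    score = {room: 0.0 for room in graph}
    for source in graph:
        order = []                               # rooms in BFS visit order
        preds = {room: [] for room in graph}     # shortest-path predecessors
        sigma = {room: 0 for room in graph}      # shortest-path counts
        sigma[source] = 1
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies from the farthest rooms back to the source.
        delta = {room: 0.0 for room in graph}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    # Each unordered pair of rooms was counted once from each endpoint.
    return {room: value / 2 for room, value in score.items()}


if __name__ == "__main__":
    between = betweenness(HOUSE)
    close = closeness(HOUSE)
    for room in sorted(HOUSE, key=between.get, reverse=True):
        print(f"{room:11s} betweenness={between[room]:5.1f} "
              f"closeness={close[room]:.3f}")
```

In this toy plan the atrium scores highest on both measures, because every route between the entrance and the rear of the house passes through it. A model built on such scores alone would predict the same traffic regardless of what is painted on the walls.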

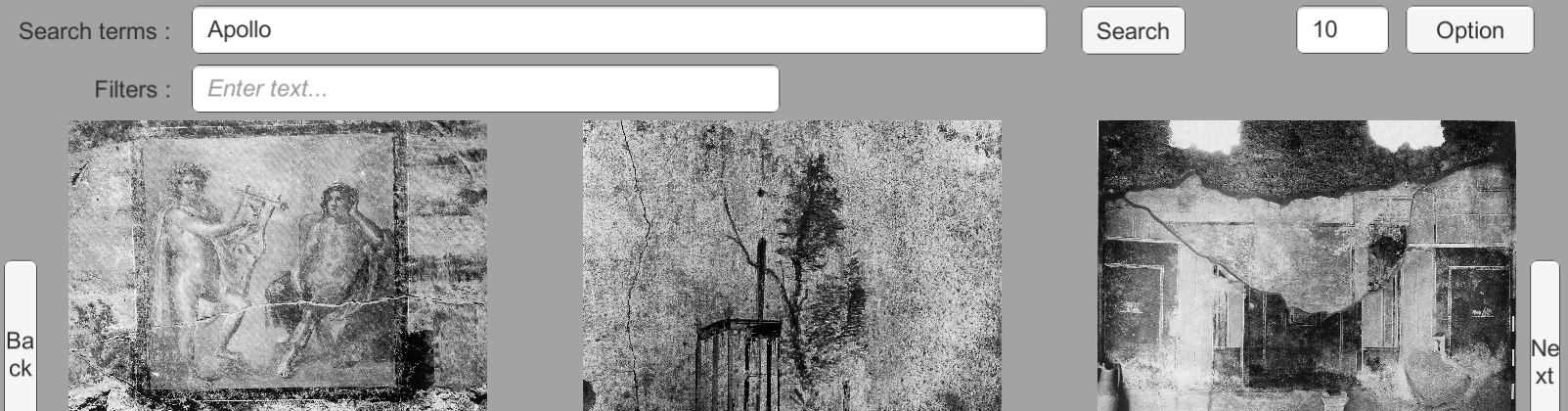

Pompei: pitture e mosaici - Convolutional Neural Network and Natural Language Processing

Working together with the University of Arkansas Libraries, VRP has digitized all 11 volumes of Pompei: pitture e mosaici (PPM), the most significant archival source for research on frescoes and mosaics in Pompeii. This was an essential first step toward creating an interactive software tool to navigate this critical dataset. As part of her Ph.D. research, Cindy Roullet has created a working prototype of this tool, PPMExplorer (PPM-X). Based on adaptations of the Inception v3 and Inception ResNet v2 Convolutional Neural Networks, Cindy has used transfer learning to develop a similarity-based image search capability for PPM-X. At the same time, she has used Natural Language Processing to develop a similarity-based text search capability for the captions of PPM. PPM-X thus allows users to search images and captions together to track similar content (animals, myths, gods, objects, etc.) across the corpus. PPM-X was also developed in Unity, and will be public facing as a WebGL application. Integrated into VRP, PPM-X will allow users to visualize content in PPM against spatial types: what kind of decoration, with what level of complexity, in what kind of space.
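Similarity search over image features can be sketched in a few lines. The example assumes each image has already been reduced to a feature vector by a neural network, and it ranks images by cosine similarity to a query image. The image identifiers, the four-dimensional vectors, and the use of cosine similarity are hypothetical stand-ins; the source does not specify which similarity measure or feature size PPM-X uses.

```python
import math

# Hypothetical feature vectors standing in for CNN embeddings. Real
# embeddings typically have hundreds or thousands of dimensions.
EMBEDDINGS = {
    "fresco_bird_01": [0.9, 0.1, 0.0, 0.2],
    "fresco_bird_02": [0.8, 0.2, 0.1, 0.1],
    "mosaic_dog_01": [0.1, 0.9, 0.2, 0.0],
    "fresco_venus_01": [0.0, 0.1, 0.9, 0.4],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query_id, embeddings, top_k=3):
    """Return up to top_k (image_id, similarity) pairs, best match first."""
    query = embeddings[query_id]
    scored = [
        (image_id, cosine_similarity(query, vector))
        for image_id, vector in embeddings.items()
        if image_id != query_id
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    for image_id, score in most_similar("fresco_bird_01", EMBEDDINGS):
        print(f"{image_id}: {score:.3f}")
```

The same ranking function would work for captions if each caption were first converted to a vector, which is one way a combined image and text search could share a single similarity routine.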

Project Outcomes

Publications:

C. Roullet, D. Fredrick, J. Gauch, and R. G. Vennarucci. 2019. "An Automated Technique to Recognize and Extract Images from Scanned Archaeological Documents," in 2019 International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 20-25 September, 2019. Los Alamitos, CA: IEEE Computer Society. 6 pages.

Presentations:

Roullet, C., D. Fredrick, J. Gauch, and R. G. Vennarucci, “An Automated Technique to Recognize and Extract Images from Scanned Archaeological Documents.” 15th International Conference on Document Analysis and Recognition, Sydney, Australia, 20-25 September, 2019.

Fredrick, D. and R. G. Vennarucci, “This is Your Brain on Space: Holistic Environmental Modeling in Ancient Pompeii.” Parthenon2: Digital Approaches to Architectural Heritage, Nashville, TN, 28-30 March, 2019.

Fredrick, D., R. G. Vennarucci, Y.-C. Wang, X. Liang, and D. Zigelsky, “This Is Your Brain on Space: Leveraging Neurocartography to Understand Spatial Cognition in Pompeian Houses.” 120th annual meeting of the AIA, San Diego, CA, 3-6 January, 2019.

Fredrick, D. and R. G. Vennarucci, “Cognitive Mapping in Ancient Pompeii.” 46th annual meeting of the CAA, Tübingen, Germany, 19-23 March, 2018.

Team Members

Dave Fredrick - Principal Investigator, Project Lead, and Content Expert

Rhodora Vennarucci - Co-director and Research and Content Expert

Will Loder - Project Manager

Cindy Roullet - CNN Main Engineer

Adam Schoelz - Main Project Engineer

Chloe Hiley - Main Environment Artist

Mika Moore - General and Plant Artist

Ian Ruiz - Unity Project Engineer

Matthew Brooke - Unity Project Engineer

Jenny Wong - Testing Designer and Researcher

Claire Campbell - ArcMap Technician, Researcher, and Texturing Support Artist

Rachel Murray - ArcMap Technician, Researcher, and Testing Designer

Kelsey Myers - ArcMap Technician

Nina Andersen - Former ArcMap Technician, Researcher, and Texturing Support Artist